Machine Learning Foundations Through Q&A

Introduction

In this article, we will review the basics of Machine learning & Deep learning in the form of Q&A. Consider this as some sort of FAQs on ML & DL when someone new to the field is trying to understand. We will explore key concepts, applications, and common terminologies to provide a solid foundation for anyone starting their journey into the exciting world of ML and DL. I was reading the fastai book and found this Q&A sort of writing quite helpful. It is as if I am reasoning myself and it gives me a moment to think about the article that is coming up instead of reading through a heading and understanding the content in it. (Some of my own pedagogy!)

Q: What is regular programming?

A: Regular programming is a method to make computers accomplish a particular task by providing them with precise step-by-step instructions.

Example: Let’s say we want to write a program to add two numbers together. In regular programming, we would need to explicitly write down the steps, such as taking input for the two numbers, performing the addition, and displaying the result.

Regular programming can be represented as below. A program which takes in input and provides resulttts.

Q: What is machine learning, and how does it differ from regular programming?

A: Instead of explicitly instructing the computer with precise steps, machine learning allows the computer to learn from examples and figure out how to solve problems on its own.

Example: In regular programming, if we want a computer to identify images of dogs, we would need to write detailed code telling it how to recognize specific features of a dog. However, in machine learning, we would provide the computer with a large dataset of images labeled as “dog” or “not dog,” and it would learn from those examples to identify dogs without explicit instructions.

Q: When did machine learning concept come about?

Arthur Samuel was an IBM researcher who, in 1949, pioneered the concept of machine learning. He proposed the idea of training computers by showing them examples of a problem and allowing them to learn how to solve it on their own, rather than explicitly programming the steps.

The key concepts in Samuel’s idea of machine learning include:

- “Weight assignment”: Representing variables with values that the computer can adjust during the learning process.

- “Actual performance”: Evaluating the performance of the computer’s current weight assignment based on its results.

- “Automatic means of testing”: Having a mechanism to automatically evaluate the computer’s performance without human intervention.

- “Mechanism for improving performance”: Implementing an automatic process to adjust weight assignments to enhance the computer’s performance.

Q: How does the term “model” differ from the traditional concept of a “program” in machine learning?

A: The term “model” is used to describe a special kind of program. Unlike traditional programs that follow specific, fixed steps to complete a task, a machine learning model can perform multiple tasks and make decisions based on its internal weights or model parameters. These parameters define how the model behaves and can be adjusted to adapt its behavior.

Here is the updated high level representation of machine learning. As you can see, we have swapped out Program with Model and included Weights which control the behaavior of the model.

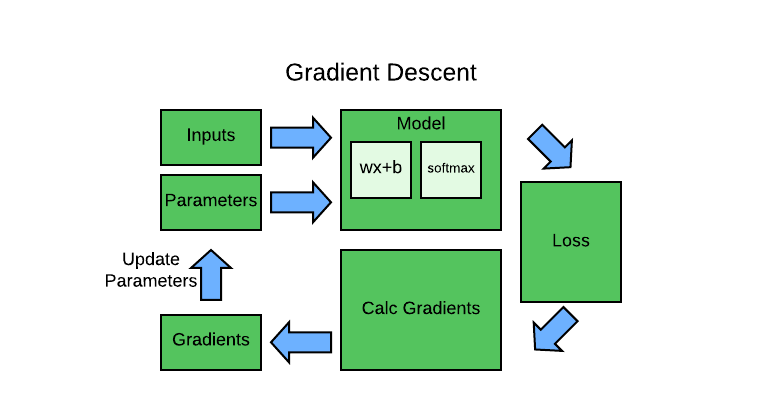

Q: How does the model learn optimal weights (behavior)?

A: Arthur Samuel identified two key components to make machine learning automatic and enable the system to “learn” from its experience:

- Automatic means of testing: A method to evaluate the current weight assignment’s effectiveness in terms of actual performance. In the case of Samuel’s checkers program, this would involve testing how well the model plays against other models.

- Mechanism for weight adjustment: An automated process to alter the weight assignment to improve the model’s performance. The adjustments are based on the comparison between models, where the winning model’s weights influence the updates.

Q: What happens to the weights of a machine learning model after it has been trained?

A: After a machine learning model has been trained and the final, best weight assignment has been selected, the weights become fixed and constant. At this point, the training process is complete, and the model is ready for real-world use. The selected weights become an integral part of the trained model, as they determine how the model will make predictions or decisions on new, unseen data.

Example: Consider a trained machine learning model for image recognition, specifically identifying cats and dogs. During the training process, the model’s weights are adjusted based on a large dataset of labeled images of cats and dogs, allowing the model to learn to differentiate between them. Once the training is finished and the optimal weights are chosen, the model becomes a stable system with fixed weights. Now, when you input a new image, the model will use its fixed weights to classify it as either a cat or a dog without changing the weights any further.

The below shows a representation of a trained model. As we can see, this resembles our earlier regular program representation. This is an important insight: a trained model can be treated just like a regular computer program.

Q: How has the terminology evolved in modern deep learning compared to Arthur Samuel’s time?

A: In modern deep learning, the terminology has evolved as follows:

- Architecture: The functional form of the model is referred to as its “architecture.” It represents the overall structure of the model and how the different layers are interconnected.

- Parameters: The weights in the model are now called “parameters.” These parameters are the numerical values that the model learns during the training process and influence the model’s behavior.

- Independent Variable: The data used for making predictions, excluding the labels, is known as the “independent variable.” It serves as input to the model.

- Predictions: The results produced by the model based on the given inputs are still referred to as “predictions.” These predictions represent the model’s output.

- Loss: The measure of performance, indicating how well the model’s predictions match the correct labels, is now called the “loss.” The goal is to minimize the loss during training to improve the model’s accuracy.

- Labels/Targets/Dependent Variable: The correct labels used during training to compute the loss are known as “labels,” “targets,” or the “dependent variable.” These labels represent the ground truth values for the input data.

Example: In a modern deep learning model for image classification, the “architecture” defines the layers and connections in the neural network. The “parameters” are the weights that the model learns from the data. The “independent variable” is the image data fed into the model for prediction. The “predictions” are the model’s outputs, like classifying the image as a “dog” or “cat.” The “loss” quantifies how accurate the predictions are compared to the correct “labels” (e.g., “dog” or “cat”) during the training process.

Q: What are some fundamental limitations inherent to machine learning?

A: Some fundamental limitations of training deep learning models are as follows:

-

Data Dependency: A model cannot be created without data. To train a deep learning model effectively, a substantial amount of relevant data is required. The model learns from this data to identify patterns and make predictions.

-

Limited Learning Scope: A deep learning model can only learn to recognize and operate on the patterns that are present in the input data used for training. If certain patterns or variations are missing from the training data, the model may struggle to handle unseen data effectively.

-

Prediction-Oriented: Machine learning, including deep learning, focuses on making predictions based on the data it has been trained on. It doesn’t inherently provide recommended actions or strategies for decision-making.

-

Need for Labeled Data: To train a deep learning model, not only is input data necessary, but also labeled data. Labels indicate the correct outputs for the input data, and the model learns from the correlation between the input and labels to make accurate predictions.

Example: Suppose we want to use deep learning to build an image classifier that can distinguish between pictures of dogs and cats. To achieve this, we require a large dataset of images of dogs and cats, along with the corresponding labels indicating which pictures are dogs and which are cats. The model will learn to recognize features and patterns in the labeled data and use that knowledge to predict whether a new image contains a dog or a cat. Without labeled data, the model would lack the information it needs to make accurate predictions.

Q: What is overfitting and how does it occur during the training process?

A: Overfitting is a common issue in machine learning, including deep learning, where a model performs extremely well on the training data but fails to generalize well on new, unseen data. It occurs when the model memorizes specific patterns and noise present in the training data rather than learning the underlying general patterns that apply to unseen data.

Explanation: During the training process, the model tries to minimize its error or loss on the training data, and over time, it becomes better at predicting the training examples. However, if the model is trained for too long, it may start memorizing the training data, including noise and outliers, instead of learning the essential features that are genuinely relevant to the task. As a result, the model’s performance on the validation set (unseen data) may deteriorate because it has become too specialized in the training data.

Example: Suppose we have a deep learning model to identify handwritten digits. During the training process, the model gets better at recognizing the digits in the training dataset, achieving high accuracy on this data. However, if the model is trained for an extended period, it might start memorizing specific examples from the training set, including slight variations in writing styles and noise. As a consequence, when tested on new handwritten digits that it has not seen before, the model’s accuracy might decrease significantly, indicating that it is overfitting to the training data.

What is a Validation Set?

Validation Set: Essential for training a model, the validation set is a separate dataset used to evaluate the model’s accuracy on unseen data. Measuring accuracy on the validation set helps prevent overfitting, where the model becomes too specialized in the training data and fails to generalize well to new data.

What is a Test Set?

A: In addition to the training and validation sets, there is a third dataset called the test set. The test set is used to assess the final performance of the trained model. Once the model has been trained and its parameters have been optimized using the training set and evaluated on the validation set, the final evaluation of the model’s performance is done on the test set. The test set provides an unbiased estimate of how well the model will perform on new, unseen data in real-world scenarios. It helps ensure that the model’s performance is reliable and not biased towards the data it was trained on.

Q: Why are deep learning models hard to understand, particularly compared to simpler models like linear regression?

A: Deep learning models are difficult to understand because of their “deep” structure, containing hundreds or thousands of layers. Unlike simpler models like linear regression, where we can easily determine the importance of input variables based on their weights, deep neural networks have complex interactions between neurons and hidden layers, making it challenging to identify the factors influencing their predictions.

Q: What is the Universal Approximation Theorem in the context of neural networks, and what are its practical limitations?

A: The Universal Approximation Theorem states that neural networks have the ability to theoretically represent any mathematical function. In other words, given enough complexity and depth, neural networks can approximate any function to a desired degree. However, in practice, there are limitations due to the availability of data and computational resources, making it impossible to train a model to represent all functions.

Example: Imagine you have a neural network with a large number of layers and neurons. According to the Universal Approximation Theorem, this network could approximate any function, whether it’s linear, nonlinear, or complex. However, practically, you may not have access to enough data to train the network for all possible functions, and the computational power required to optimize such a complex model may be infeasible. So, while the theorem highlights the theoretical capabilities of neural networks, practical considerations constrain their ability to approximate all functions in real-world scenarios.

Q: What are the essential components required for building and training a deep learning model?

A: To build and train a deep learning model, you need the following components:

-

Architecture: You must choose an appropriate architecture or structure for the model, which involves selecting the type and arrangement of layers and neurons.

-

Data: You need a dataset to feed into the model as input, representing the examples on which the model will learn.

-

Labels: For most deep learning use-cases, you require labeled data, where each input data point is associated with the correct output or target value. Labels are used to compare the model’s predictions during training.

-

Loss Function: A loss function is necessary to quantitatively measure how well the model’s predictions match the true labels. It represents the discrepancy between the predicted output and the actual target.

-

Optimizer: To improve the model’s performance, you need an optimizer that updates the model’s parameters based on the loss function’s evaluation. The optimizer adjusts the model’s weights to minimize the loss and enhance its predictive capabilities.

Example: Suppose we want to build a deep learning model for image classification to differentiate between cats and dogs. We select a convolutional neural network (CNN) as the architecture, with layers designed to recognize visual patterns. We collect a dataset of labeled images of cats and dogs. The images serve as input data, and their corresponding labels (cats or dogs) are used during training to compare the model’s predictions. We choose a loss function, such as categorical cross-entropy, to quantify the difference between predicted probabilities and true labels. With an optimizer, like stochastic gradient descent (SGD), we update the model’s parameters (weights) in each training iteration to minimize the loss and improve the model’s ability to classify new images correctly.

Q: What are pretrained models, and why are they useful in deep learning?

A: Pretrained models are deep learning models that have been trained on large datasets for tasks other than the current one. For instance, image recognition models might be pretrained on the ImageNet dataset with 1000 classes. These models are useful because they have already learned to recognize simple features like edges and colors, which can be beneficial in various tasks.

Example: Suppose we want to build a model to classify different types of animals, but we don’t have a large dataset for this specific task. Instead of training the model from scratch, we can use a pretrained image recognition model trained on the ImageNet dataset. This model has already learned to detect edges, colors, and other basic visual features, making it a good starting point for our animal classification task. However, since the pretrained model was not trained on the exact animal classification task, we may need to fine-tune or adapt it to perform well on our specific problem.

Q: How do you adapt a pretrained model for a specific task, and what are the “head” layers in this context?

A: When using a pretrained model, the early layers have already learned general features from the original training task (e.g., edge and color detection in an image recognition model). However, the later layers are more specialized and specific to the original task, making them less useful for the new task.

To make the pretrained model work for the new task, you discard the later layers and add new layers that match the requirements of your dataset and target task. These new layers become the “head” of the model. You can then train the model on your specific dataset with the new head while keeping the pretrained weights of the early layers, enabling the model to leverage the previously learned general features.

Example: Suppose you have a pretrained image recognition model that was originally trained on the ImageNet dataset. You want to use this model for a specific task, such as classifying different species of birds. In this case, you remove the later layers of the pretrained model, which were specific to ImageNet’s 1000 classes. Then, you add new layers to the model, sized appropriately for your bird classification task. These new layers form the “head” of the model, and you train the model on your bird dataset using the pretrained weights from the early layers and random weights in the head layers. This process allows the model to quickly adapt to your bird classification task while benefiting from the general visual features learned in the early layers.

Q: What types of features do the earlier and later layers of a deep learning model typically learn?

A: In a deep learning model, the earlier layers tend to learn simple features like diagonal, horizontal, and vertical edges. As we move to the later layers, the model learns more advanced features, such as car wheels, flower petals, and even outlines of animals.

The hierarchical nature of deep learning allows it to learn progressively more abstract and meaningful features as the data moves through the layers of the network.

Q: What is the role of the architecture in a machine learning model?

A: The architecture of a machine learning model serves as the template or structure that the model follows. It defines the mathematical model that the machine learning algorithm tries to fit to the data.

In machine learning, the architecture specifies the arrangement of layers, nodes, and connections in the model. For example, in a neural network, the architecture determines the number of layers, the number of neurons in each layer, and how the neurons are interconnected. Each layer performs specific computations on the data, transforming the input data into meaningful representations. The architecture plays a crucial role in determining the complexity and capacity of the model, which affects its ability to learn and generalize to new data.

Q: What are hyperparameters in the context of training machine learning models, and how do they impact the training process?

A: Hyperparameters are the parameters that define how a machine learning model is trained, but they are not learned during the training process itself. Instead, they are set before training and can significantly affect the model’s performance.

Explanation: Hyperparameters are distinct from the model’s parameters, which are learned during training (e.g., the weights and biases in a neural network). Hyperparameters, on the other hand, control various aspects of the training process. Examples of hyperparameters include the number of epochs (how many times the model sees the entire training dataset), the learning rate (how fast the model parameters are updated during training), the batch size (number of samples used in each training iteration), and the size of the hidden layers in a neural network.

Tuning hyperparameters is an essential part of model development, as selecting appropriate values can significantly impact the model’s ability to learn and generalize. It often involves experimentation and validation to find the optimal hyperparameter settings for a given task.

Example: In training a neural network for image classification, we need to set hyperparameters like the number of epochs, learning rate, and batch size. If we choose too few epochs, the model might not converge to the best performance. If the learning rate is too high, the model might oscillate or diverge during training. These hyperparameters can have a substantial impact on the model’s training progress and ultimate accuracy, so it’s crucial to fine-tune them for optimal results.

Q: Why is a GPU (Graphics Processing Unit) preferred over a CPU (Central Processing Unit) for deep learning tasks?

A: A GPU is more suitable for deep learning due to its parallel processing capabilities, optimized memory bandwidth, and specialized hardware for tensor operations. It can efficiently handle large-scale matrix computations, making it well-suited for deep learning tasks.

Explanation: The main differences between a GPU and a CPU (Central Processing Unit) lie in their designs and functionalities:

- Parallel Processing: GPUs are designed to perform massive parallel processing. They consist of thousands of small cores capable of handling multiple tasks simultaneously. This parallelism makes GPUs well-suited for the matrix operations involved in deep learning, where many calculations can be performed at the same time.

- Vectorized Operations: GPUs are optimized for vectorized operations, allowing them to execute a single instruction on multiple data elements simultaneously. This feature is advantageous in deep learning, where large datasets are processed in parallel.

- Memory Bandwidth: Deep learning models often involve large amounts of data, and GPUs have higher memory bandwidth compared to CPUs. This allows them to access and move data more efficiently during computations, reducing the bottleneck caused by memory access.

- Specialized Hardware: Many modern GPUs are equipped with specialized hardware and tensor cores that accelerate common deep learning operations, such as matrix multiplications used in neural network training.

- Performance-to-Cost Ratio: GPUs offer better performance-to-cost ratios for deep learning tasks compared to CPUs. They provide substantial computational power at a relatively lower cost, making them popular choices for researchers and practitioners.

On the other hand, CPUs are general-purpose processors designed to handle a wide range of tasks efficiently, but they are not optimized for parallel computations like GPUs. In a typical deep learning workload, where large matrix multiplications and activation functions dominate, CPUs might be slower and less effective due to the following reasons:

- Limited Parallelism: CPUs have a smaller number of more powerful cores designed for sequential processing. While they can execute a wide variety of tasks efficiently, they lack the parallel processing capabilities of GPUs.

- Memory Bottleneck: For deep learning tasks, CPUs might struggle to provide enough memory bandwidth, leading to slower data transfer and computational performance. -Higher Cost: CPUs are generally more expensive than GPUs for the same level of deep learning performance.

Example: Let’s consider training a deep neural network for image recognition. The model requires extensive matrix computations to adjust the millions of weights during training. A GPU can perform these computations in parallel, significantly speeding up the training process. In contrast, a CPU would struggle to keep up with the same computations due to its limited parallel processing capabilities, resulting in slower training times. Therefore, for deep learning tasks, a GPU offers a more efficient and cost-effective solution compared to a CPU.

References

Twitter Facebook LinkedIn

Comments